According to an unofficial consensus, the birth of artificial intelligence as an independent field of research can be dated to the summer of 1956. John McCarthy, then a member of the mathematics department at Dartmouth College, had persuaded the Rockefeller Foundation to finance a study that was “to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.” In addition to McCarthy (who was a professor at Stanford University until 2000 and coined the term “artificial intelligence”), several other participants took part in the historic workshop at Dartmouth: Marvin Minsky (later a professor at MIT), Claude Shannon (the founder of information theory), Herbert Simon (later a Nobel laureate in economics), Arthur Samuel (developer of a pioneering self-learning checkers program), and half a dozen further experts from science and industry. They dreamed that it might be possible to build a machine capable of handling human tasks that had until then been thought to require intelligence.

The Dartmouth manifesto (written at the dawn of the AI age) is both puzzling and vague. It is not clear whether the conference participants believed that machines would one day actually think or merely behave as if they could. The word “simulate” allows both interpretations, and written and oral reports on the meeting support both positions. Some participants were concerned with networks of artificial neurons which, they hoped, could in some sense recreate the biological neurons of the brain, while others were more interested in producing programs that behave intelligently, regardless of whether the principles underlying those programs bear any resemblance to the way the human brain works. The gap between the two paradigms can be summarized as

Thinking = the way the brain does it,

and

Thinking = the results that the brain produces.

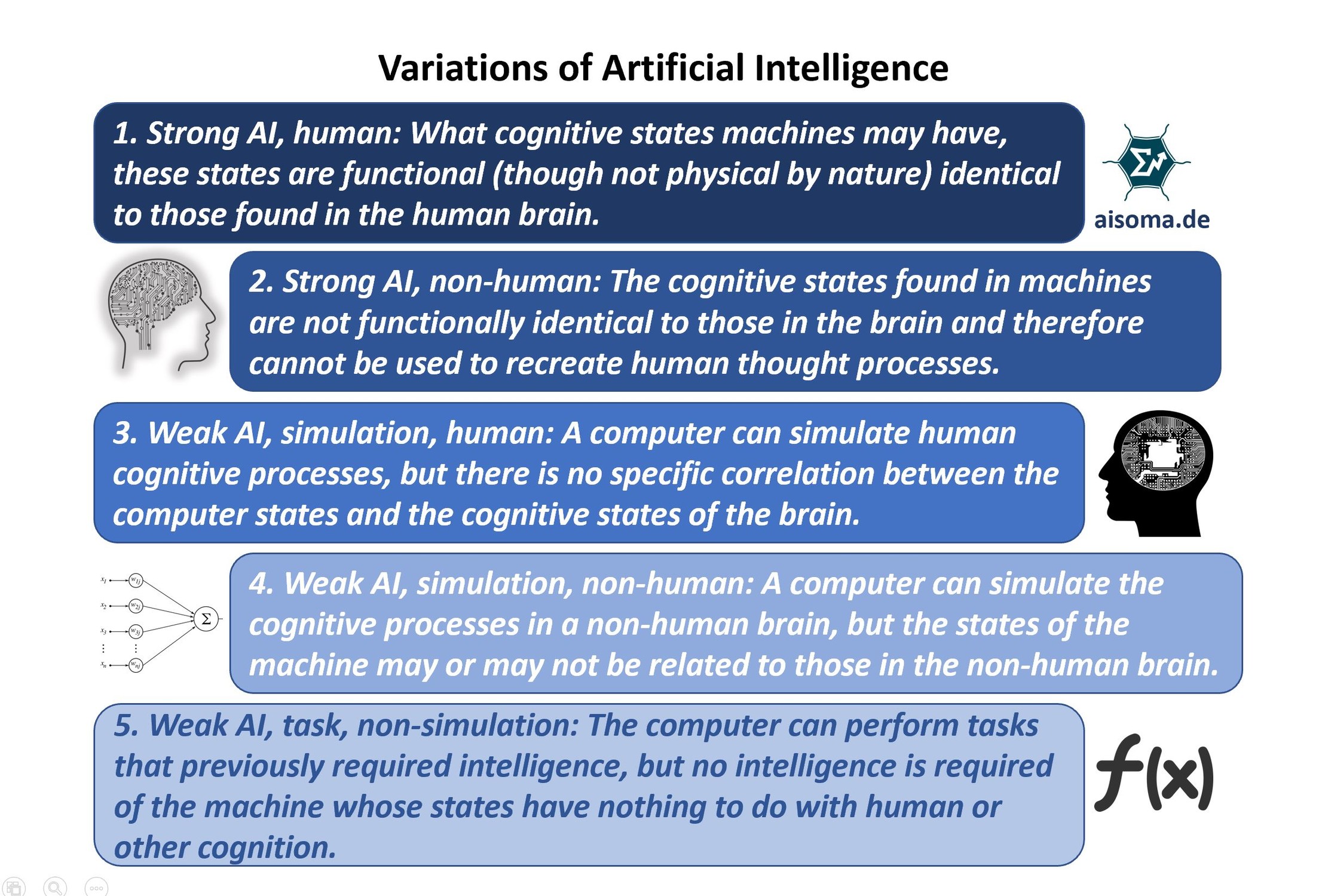

Along these lines, the AI community is divided into the so-called strong AI and weak AI schools.

To better understand what is at stake in the question of whether machines can think, it may prove useful to refine the “strong” versus “weak” dichotomy a little and to compare it with a scheme suggested by the philosopher Keith Gunderson. He distinguishes between the following AI “games”:

It is essential to clarify the difference between functionally equivalent and physically identical pairs of states. The easiest way to see the difference is to imagine a correspondence between, say, three cognitive states C1, C2 and C3 and three machine states M1, M2 and M3. These states are clearly not physically identical: the machine states are merely patterns of 0s and 1s on a silicon chip, while the cognitive states are tied to chemical concentrations and electrical patterns in a brain. However, the two state sequences would be functionally equivalent if, for example, we found that the machine sequence M1->M3->M2 corresponds to the cognitive sequence C2->C3->C1 every time. In this case, we could say that M3 and C3 are functionally identical because they play the same functional role in their respective sequences; i.e., each is always the middle state of the three-part series.
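The idea of functional equivalence can be made concrete with a toy check. The sketch below rests on an assumption of our own, not on Gunderson: states are plain labels, and “functional role” is reduced to the position a state occupies in an observed sequence. The hypothetical function `role_mapping` returns a one-to-one correspondence between machine and cognitive states if every observed pair of runs lines up consistently, and `None` otherwise. It says nothing about physical identity; it only tests functional correspondence.

```python
from typing import Dict, List, Optional


# Hypothetical helper for illustration: "functional role" is modeled
# simply as the position a state occupies in an observed sequence.
def role_mapping(
    machine_runs: List[List[str]], cognitive_runs: List[List[str]]
) -> Optional[Dict[str, str]]:
    """Return a one-to-one machine->cognitive state mapping if every pair of
    observed runs lines up consistently, otherwise None."""
    if len(machine_runs) != len(cognitive_runs):
        return None
    forward: Dict[str, str] = {}   # machine state -> cognitive state
    backward: Dict[str, str] = {}  # cognitive state -> machine state
    for m_run, c_run in zip(machine_runs, cognitive_runs):
        if len(m_run) != len(c_run):
            return None
        for m, c in zip(m_run, c_run):
            # Each machine state must always occupy the same role as one
            # particular cognitive state...
            if forward.setdefault(m, c) != c:
                return None
            # ...and vice versa, so the correspondence is one-to-one.
            if backward.setdefault(c, m) != m:
                return None
    return forward


if __name__ == "__main__":
    # The example from the text: M1->M3->M2 always accompanies C2->C3->C1.
    machine = [["M1", "M3", "M2"]] * 3
    cognitive = [["C2", "C3", "C1"]] * 3
    mapping = role_mapping(machine, cognitive)
    print(mapping)        # {'M1': 'C2', 'M3': 'C3', 'M2': 'C1'}
    print(mapping["M3"])  # C3: both are always the middle state

    # A single inconsistent run breaks the functional equivalence.
    print(role_mapping(machine + [["M1", "M3", "M2"]],
                       cognitive + [["C1", "C3", "C2"]]))  # None
```

The two dictionaries enforce consistency in both directions, so a machine state cannot stand in for two different cognitive states, nor the reverse.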

As far as genuine machine thinking is concerned, the first category in the above overview is the only important one: strong AI, human. Everything else, although certainly technically attractive and economically rewarding, lacks any real intellectual or philosophical appeal, at least as far as the question of thinking machines is concerned. This may surprise some, given the massive hype that the media (and various self-serving representatives of the AI guild) have recently generated. They praise the wonders of the so-called expert systems developed in the AI labs of Massachusetts, London and Tokyo, enthusiastically describe the robots and programs waiting around the corner to fulfill all our wishes (or take away our jobs), and demand that still more money be thrown out of the window. Not to mention the speculation of capitalists and entrepreneurs and their computer-obsessed allies, who are everywhere trying to cash in on people’s readiness to believe that machines have minds. This deplorable situation can be traced back to a handful of programs that show some progress in the last and intellectually not particularly productive category: weak AI, task, non-simulation.

Progress in this area says about as much about human thinking as the development of the airplane says about how birds fly. So from now on, when we talk about cognitive states in machines, we are referring to the kind of states described in our first category: strong AI, human.

Of course, no one has yet put forward an unassailable argument that the inner states of an appropriately programmed digital computer are functionally identical to the states of consciousness of a person who covetously eyes a luxury car, studies the seemingly endless menu of a Chinese restaurant, checks an account balance, enjoys a Bach fugue, or engages in any of the myriad other activities that we call, in some sense, thinking.

In the short term, AI will continue to be dominated by point 5. The most recent example is the victory of a computer program over one of the world’s best Go players. (Consider the enormous number of roughly 2.08 × 10¹⁷⁰ legal positions on a 19×19 Go board. By comparison, chess has “only” about 10⁴³ positions, and the number of atoms in the observable universe is estimated at about 10⁸⁰!) In the years that follow (roughly years 3 to 10), points 4 and 3 will dominate. Things will reach the point where we cannot always say with certainty whether we are dealing with real “consciousness” or merely a brilliant simulation unfolding right in front of us. Progress in robotics will do the rest: AI embedded in a quasi-human body will certainly have more “effect” than text output on a screen or speech from a device such as a smartphone.
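To put the magnitudes quoted above side by side, it helps to compare them on a logarithmic scale. The short Python sketch below simply takes the text’s figures as constants (they are estimates, not exact counts). The naive upper bound 3³⁶¹ for Go, which treats each of the 361 points as empty, black or white, is added here only for context, and the helper name `orders_of_magnitude` is hypothetical.

```python
import math

# Figures quoted in the text (estimates, not exact counts).
GO_LEGAL_POSITIONS = 2.08e170  # legal positions on a 19x19 Go board
CHESS_POSITIONS = 1e43         # commonly cited estimate for chess
ATOMS_IN_UNIVERSE = 1e80       # rough estimate for the observable universe

# Naive upper bound for Go (added for context): each of the 361 points
# is empty, black or white, ignoring the rules that make a position legal.
go_upper_bound = 3 ** 361


def orders_of_magnitude(a: float, b: float) -> float:
    """How many powers of ten larger a is than b."""
    return math.log10(a) - math.log10(b)


if __name__ == "__main__":
    print(f"3^361 is about 10^{math.log10(go_upper_bound):.2f}")
    print(f"Go vs. chess: {orders_of_magnitude(GO_LEGAL_POSITIONS, CHESS_POSITIONS):.1f} orders of magnitude")
    print(f"Go vs. atoms: {orders_of_magnitude(GO_LEGAL_POSITIONS, ATOMS_IN_UNIVERSE):.1f} orders of magnitude")
```

Run as a script, it reports that the Go figure exceeds the chess estimate by about 127 orders of magnitude and the atom estimate by about 90. Working with `math.log10` avoids any concern about float overflow, and it accepts the exact integer `3 ** 361` directly.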

Source: https://www.linkedin.com/pulse/variation-artificial-intelligence-murat-durmus